Computer programs might not be as objective and unbiased as they seem, according to new research. The software that processes job applications, housing loan decisions, and other data that affects people’s lives might be picking up biases along the way.

It’s common practice for companies to use software to sort through job applications and score applicants based on numbers like grade point average or years of experience; some programs even search for keywords related to skills, experience, or goals. In theory, these programs don’t just weed out unqualified applicants; they also keep human biases about things like race and gender out of the process.

Recently, there’s been discussion of whether these selection algorithms might be learning how to be biased. Many of the programs used to screen job applications are what computer scientists call machine-learning algorithms, which are good at detecting and learning patterns of behavior. Amazon uses machine-learning algorithms to learn your shopping habits and recommend products; Netflix uses them, too.

Some researchers are concerned that resume-scanning software may be using applicant data to make generalizations that end up inadvertently mimicking humans’ discrimination based on race or gender.

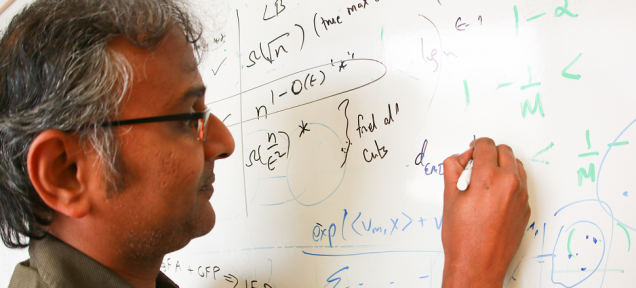

“The irony is that the more we design artificial intelligence technology that successfully mimics humans, the more that A.I. is learning in a way that we do, with all of our biases and limitations,” said University of Utah computer science researcher Suresh Venkatasubramanian in a recent statement.

Along with his colleagues from the University of Utah, the University of Arizona, and Haverford College in Pennsylvania, Venkatasubramanian found a way to test programs for accidentally learned bias, and then shuffle the data to keep biased decisions from happening. They presented their findings at the Association for Computing Machinery’s 21st annual Conference on Knowledge Discovery and Data Mining last week in Sydney, Australia.

The researchers are using machine-learning algorithms to keep tabs on other machine-learning algorithms. Venkatasubramanian and his team’s software tests whether it’s possible to accurately predict applicants’ race or gender based on the data being analyzed by a resume-scanning program, which might include things like school names, addresses, or even the applicants’ names. If the answer is yes, that information might be leading the resume scanner to make generalizations that unfairly discriminate against applicants based on demographics.
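
To make the idea concrete, here is a minimal Python sketch of that kind of test. It is an illustration only, not the researchers’ software: the toy data, the function names, and the simple majority-vote predictor are all assumptions. It tries to guess each applicant’s group from a single field and reports the balanced error rate, which is the error rate averaged across groups.

```python
from collections import Counter, defaultdict

def majority_predictor(features, groups):
    """Map each feature value to the group most often seen with it."""
    counts = defaultdict(Counter)
    for value, group in zip(features, groups):
        counts[value][group] += 1
    return {value: c.most_common(1)[0][0] for value, c in counts.items()}

def balanced_error_rate(features, groups, predictor):
    """Average, over groups, of the share of that group's records guessed wrong."""
    totals = Counter(groups)
    errors = Counter()
    for value, group in zip(features, groups):
        if predictor.get(value) != group:
            errors[group] += 1
    return sum(errors[g] / totals[g] for g in totals) / len(totals)

# Hypothetical toy data: one school name and one demographic group per applicant.
schools = ["north", "north", "north", "south", "south", "south", "north", "south"]
groups = ["a", "a", "a", "b", "b", "b", "b", "a"]

# For simplicity the predictor is fit and scored on the same records;
# a real audit would hold out separate test data.
predictor = majority_predictor(schools, groups)
ber = balanced_error_rate(schools, groups, predictor)
print(f"balanced error rate: {ber:.2f}")
```

With two groups, a predictor that always guesses the same group has a balanced error rate of 0.5. The closer the rate falls to zero, the more the field gives away group membership, and the more reason there is to worry that a resume scanner could pick up on it.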

In that case, according to Venkatasubramanian and his team, the solution is to redistribute the data in the resume-scanning program so that the algorithm can’t see the data that led to the bias.
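
The article does not spell out how the redistribution works, so the following Python sketch shows just one possible approach, under stated assumptions: a single numeric field, equal-sized groups, and a hypothetical helper named `repair_feature`. It gives every group the same spread of values while keeping each applicant’s rank within their own group.

```python
from statistics import median

def repair_feature(values, groups):
    """Give every group the same value distribution, preserving rank within each group.

    Illustrative only: assumes a numeric feature and equal-sized groups.
    """
    by_group = {}
    for index, (value, group) in enumerate(zip(values, groups)):
        by_group.setdefault(group, []).append((value, index))

    sizes = {len(members) for members in by_group.values()}
    if len(sizes) != 1:
        raise ValueError("this sketch assumes equal-sized groups")

    for members in by_group.values():
        members.sort()  # lowest value first within each group

    repaired = list(values)
    for rank in range(sizes.pop()):
        # Replace the value at this rank with the median across all groups.
        target = median(members[rank][0] for members in by_group.values())
        for members in by_group.values():
            repaired[members[rank][1]] = target
    return repaired

# Hypothetical scores that perfectly separate the two groups before repair.
scores = [10, 20, 30, 40, 50, 60]
groups = ["a", "a", "a", "b", "b", "b"]

repaired = repair_feature(scores, groups)
print(repaired)
```

In this toy example every original score below 35 belongs to group "a" and every score above it to group "b". After the repair both groups hold the same set of values, so the field no longer reveals group membership, yet the top-ranked applicant in each group is still top-ranked.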

Top image: Vincent Horiuchi via University of Utah